Cloud Computing

Number of words: 33437

CHAPTER-1

INTRODUCTION

1.1 Cloud Computing

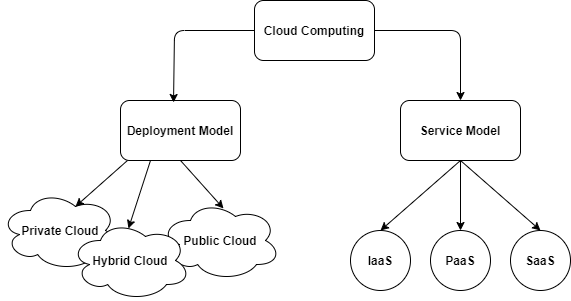

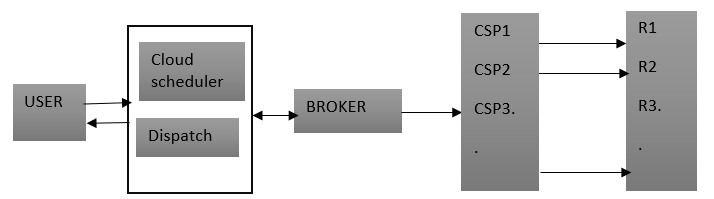

Cloud computing is a paradigm for providing on-demand for the network that is computable and also for the resources such as networks, servers, storage, applications, and services, which can quickly made available and launched with the minimized efforts of management or communication from service providers. The cloud environment is made up of five fundamental characteristics, three models of service, and four modes of deployment [1]. The topology of this cloud can be depicted as in the Fig 1.1 [2].

Fig 1.1 Cloud Computing

1.1.1 Deployment Models

Clouds come in a number of different forms, each one distinct from the others. Those are listed as below:

- Public Cloud: Anyone who wishes to use the cloud platform can do so. It might be taken, maintained, and opened up as a commercial, educational, or government institution, or a combination of these. It’s on the premises of the cloud provider.

- Private Cloud: Such platform is developed for a single entity with numerous users to use it specifically such as multiple units of business. It might be owned, administered, and directed by the company, outside business party, or a mix of the two and which can be on the site or also on the offsite areas.

- Hybrid Cloud: The cloud environment is made up of two or many separate cloud environments (private, communal, or public) that are making its operations on independent basis but are also have a link with a reliable or patented technology that allows data and application mobility (For example, cloud breaking for cloud load balancing). Due of the possibility for uniqueness in cloud scenarios and the distribution of management duties between the private organizations that are providing cloud and the providers of public cloud, hybrid models are regarded complicated and difficult to establish and maintain [3].

- Community Cloud: The community cloud is only available to a restricted set of clients from companies that share these concerns (e.g., mission, security needs, policy, and compliance issues). It might be taken, manageable or controllable by single or multiple community businesses, a third party, or a combination of the three, and it could occur on or off-site. [1].

1.2.1 Cloud Computing Services

IaaS (Infrastructure-as-a-Service), PaaS (Platform-as-a-Service), and SaaS (Software-as-a-Service) are the three most common cloud service categories (Software-as-a-Service).

- SaaS (Software-as-a-Service): The consumer has the option of utilizing cloud-based applications from the supplier. Through a variety of customer devices, applications may be accessible through a thin customer interface like an internet browser or a program interface. The client has no control or management over except for limited client explicit application settings, the hidden cloud foundation incorporates network, workers, working frameworks, stockpiling, and surprisingly specific capacities of the business in it.

- PaaS (Platform-as-a-Service): Customers or customers will be able to install consumer-built or purchased apps created on the cloud platform using the programming languages, libraries, services and tools. Consumers have no influence over the cloud infrastructure underpinning them, such as the network, servers, operating systems or storage, but manage the installed applications and perhaps the application-hosting setup settings.

- IaaS (Infrastructure-as-a-Service): For the construction and operation of arbitrary software, the client has the capacity to offer processing, storage, networks, and other essential computer resources, such as operating systems and applications. Customers have limited control over specific network components and do not manage or control the fundamental cloud infrastructure. They do, however, have control over operating systems, storage, and installed apps. (e.g. host firewalls) [1].

Virtual hosts can distribute resources across numerous guests or Virtual Machines with the aid of Virtual Machines in Cloud Computing.

1.2 Virtual Machine

A virtual machine (VM) is a virtualized computer. Virtual machine software can be used for running the programs and operational business movement, data storage, network and its connectivity, and do other activities, but it has to be updated on a regular basis and monitored. A single physical device, generally a server, can host several virtual machines that are managed by virtual machine software.

This allows computing resources (compute, storage, and network) to be allocated among these as required, resulting in increased overall performance.

1.2.1 Advantages of Virtual Machine

Virtual machines are simple to operate and maintain, and they provide a number of benefits over physical or real machines:

- “Virtual machines may run several operating systems one device, conserving the space, time, and additional expenditure to be incurred”.

- “Virtual machines let older programs run more smoothly, decrease in the expenditure of heading to the other OS”. From Linux, the operations can be headed to windows operations.

- The other operational usage of this can be the recovery from disasters and application availability.

1.2.2 Types of Virtual Machines

These are categorized into two categories: process VMs and system VMs:

- Process virtual machine: A process virtual machine hides the specifics of the underlying hardware or operating system, allowing a single host to be a single operational process for operations, offering the independent platform of development. A process VM is something like the Java Virtual Machine, which lets any OS for running its applications similar to a way as they are related to this OS or machine.

- System virtual machine:A system that is entirely virtualized A virtual machine can function in the same way as a real machine. A system platform enables several virtual machines to share the physical resources of a host computer while each operating their copy of all the OS. A hypervisor, which may run on bare hardware like VMware ESXi or ranking the position of the OS, manages this virtualization approach.

1.2.3 Cloud security challenges for virtual machines

Cloud computing security, often known as cloud security, is a collection of rules and technology that safeguard the cloud computing system’s services and resources. Cloud security is a subdomain of cyber security that covers methods for protecting cloud computing systems’ services, applications, data, virtualized IP, and related infrastructure.

Virtualized environments including virtual machines (VMs) and containers are present unique risks to cloud security. The cloud security issues posed by virtual machines can include performance problems, hardware expenses, semantic gaps, malicious software, and overall VM system security.

- Performance:

The cloud security services running on the system, hurts the VM system performance. This is due to the overhead of virtualization and inter-VM communication. Device access take up the required aspects and results exchange via cross-VM communication require extra costs of switching, which results in the increased OH of the systems.

- Hardware cost:

To ensure complete security of the virtual machines requires an efficient deal of attached resources with it. Further, using older resources or limited memory might not make the operations of the system to work systematically.

- Semantic gaps:

A problem to VM security is the semantic mismatch between the guest operating system and the underlying virtual machine monitor (VMM). Security services often require processing time to reason about a higher level of guest VM state, whereas the VMM can monitor the raw status of the guest VM.

- Malicious software:

Malicious software is another challenge for VM security. That said, VMs can be used to thwart these attacks, too. For example, various techniques are available for VM fingerprinting that can act as a honeypot for malware, such as the Agobot family of worms.

- System security:

Feature updates to cloud security services can inadvertently introduce backdoor vulnerabilities into the VM, which can then be exploited to gain access to the infrastructure as a whole.

1.2.4 Steps to protect virtualized environment

Step1: Actively monitor and update the security system

Actively monitor and analyze the hypervisor for any potential signs of compromise, and continuously audit and monitor all virtual activities. The systems must be up-to-date effective issues are made of the security system. Be sure to use the most recent hypervisor, and promptly making the application of maintenance of applications.

Step2: Implement access controls

Strong firewall controls the system and its confidentiality for making an access that is not authorized to maintain its confidentiality. Provide limited access for users to prevent modification to the hypervisor environment. Enforce strict access control and multi-factor authentication for any admin function on the hypervisor.

Step3: Separate and secure the management

To reduce the risk of VM traffic contamination, the management infrastructure should be physically separate. Above all, secure the management and VM data networks.

Step4: Use a hypervisor and disable unnecessary services

The hypervisor host management interface should be placed in a dedicated virtual network segment, only allowing access from designated subnets in the enterprise network. Guest service accounts or sessions that are not necessary should be deactivated. Disable unneeded services, such as clipboard or file sharing.

Step5: Use translation techniques and SSL Encryption

Always use network address translation techniques and Secure Sockets Layer (SSL) encryption in communication with virtual server command systems.

By spreading traffic over several network servers, a Virtual Load Balancer gives you more flexibility in balancing a server’s burden. Through virtualization, virtual load balancing attempts to imitate software-driven infrastructure. On a virtual computer, it executes the software of a physical or real load balancing device.

1.3 Load Balancing

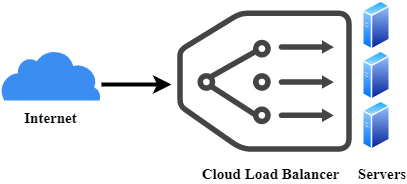

The process of dividing workloads and computational resources in a cloud computing environment is commonly known with the name of cloud load balancing. The workloads of the business can be managed by making active resource distribution on the servers and networks. Fig 1.2 [4] show the simple load balancing process.

The following are some of the most prevalent reasons for using load balancers:

- To keep the system stable.

- To boost the system’s efficiency.

- To avoid system breakdowns.

Fig 1.2 Load Balancing

1.3.1 Advantages of Cloud Load Balancing

The advantage of load balancing is listed as below:

- High Performing applications: Unlike their on-premise counterparts, cloud load balancing solutions are less costly and easier to implement. Client apps may be made to run quicker and offer better results, all while potentially saving money.

- Increased scalability: To manage website traffic, cloud balancing makes use of the cloud’s scalability and agility. You can quickly match up increasing user traffic and spread it among multiple servers or network devices by utilizing effective load balancers. It is particularly essential for ecommerce websites, which deal with thousands of users per second. They require such efficient load balancers to disperse workloads during sales or other special offers.

- Business continuity with complete flexibility: The primary aim of this balancer is to protect or save the web page from unexpected outages. At the operations when, a job is distributed over many units of servers, the task may be moved to another active node if one node fails.

1.3.2 Challenges in cloud-based load balancing

The challenges are mentioned follow:

- Virtual machine migration (time and security): Because cloud computing is a service-on-demand model, resources should be available when a service is needed. Moving resources (often virtual machines) from single server to the other servers, sometimes from a long distance, is required. In such situations, load balancing designer algorithms must look at two issues: migration time, which impacts performance and likelihood of assaults (security issue).

- “Spatially distributed nodes in a cloud: Cloud computing nodes are globally dispersed. In this instance, the difficulty is to develop load balancing algorithms to account factors such as network bandwidth, communication rates, distances between nodes and the distance between customer and resources”.

- “Single point of failure: When the node running the algorithm controller fails, this single point of failure causes the entire system to crash. The problem is to develop algorithms with distribution or decentralization.

- Algorithm complexity: The implications and the operations shall be controlled using this in the business operations. If the algorithms are complex then all these can have the negative impact over the entire operational aspects performed.

- Emergence of small data centers in cloud computing: small data centers have lesser values and use less energy than big data centres. Computing resources are thus spread worldwide. The difficulty here is to develop algorithms that balance loads for an acceptable reaction time”.

- Energy management: Algorithms for load balancing should be developed to reduce energy usage. Therefore, they should follow the approach for energy-aware planning tasks.

To perform a process and maintain a resource in a cloud computing environment, a good load balancing approach is necessary. They generate a decent schedule based on the present status of the cloud resources and do not account for resource availability changes.

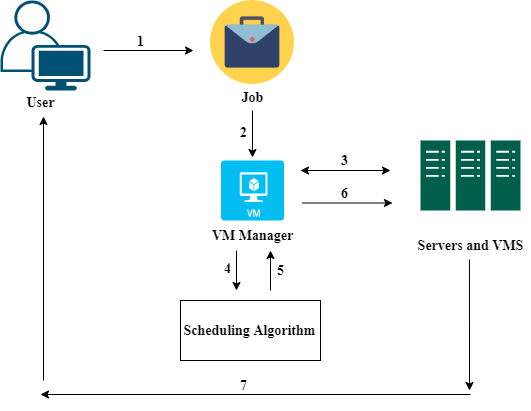

1.4 Scheduling Approaches

The act of mapping a collection of workloads to a group of virtual machines (VMs) or assigning VMs to operate on available resources in order to satisfy the demands of users is referred to as scheduling in cloud computing.

Inefficient scheduling algorithms struggle with resource excess and underuse (imbalance), leading in service degradation (in the case of overuse) or cloud resource waste (in the case of underuse) (in the case of underuse).

The fundamental idea behind scheduling is to distribute workloads (of various and complex types) across cloud resources in such a way that the process does not result in an imbalance. The scheduling algorithm should maximize response time, dependability, availability, energy consumption, cost, resource utilization, and other key performance indicator parameters. [5].

1.4.1 Scheduling Levels

There are two layers of scheduling techniques in the cloud environment:

- First level: a collection of policies for distributing VMs in a host at the host level.

- Second level: at the VM level, a collection of policies for assigning tasks to VMs.

1.4.2 Benefits of Scheduling algorithms

- Control the performance and quality of service of cloud computing.

- Manage the processor and the memory.

- The optimal scheduling algorithms make the best use of resources while reducing the total job execution time.

- Enhancing the level of fairness in all tasks.

- Increase the amount of tasks that have been completed successfully.

- Achieving a high system throughput.

- Improving load balance.

1.4.3 Task scheduling algorithms classification

Static and dynamic scheduling algorithms are the two types of scheduling algorithms. Static scheduling has a lower runtime overhead since it requires previous obtaining of needed data and pipelining of distinct task execution stages, whereas dynamic scheduling requires no prior knowledge of the job/task. As a result, the job’s execution duration is unknown, and task assignment is done as soon as the program runs [6, 7].

- Static scheduling: In comparison to dynamic scheduling, static scheduling is regarded to be relatively easy since it is dependent on prior knowledge of the system’s global state. It ignores the present state of VMs and splits all traffic equally across all VMs in the same way as round robin (RR) and random scheduling methods do.

The characteristics of static algorithms are:

- They decide based on a fixed rule, for example, input load

- They are not flexible

- They need prior knowledge about the system.

- Dynamic scheduling: Considers the present state of virtual machines without requiring prior knowledge of the system’s overall status, and distributes work based on the capability of all available virtual machines [8].

Nearly all Dynamic algorithms follow four steps:

- “Load monitoring: In this step, the load and the state of the resources are monitored

- Synchronization: In this step, the load and state information is exchanged.

- Rebalancing Criteria: It is necessary to calculate a new work distribution and then make load-balancing decisions based on this new calculation.

- Task Migration: In this step, the actual movement of the data occurs. When system decides to transfer a task or process, this step will run.

The characteristics of dynamic algorithms are:

- They decide based on the current state of the system

- They are flexible

- They improve the performance of the system”

1.4.4 Types of scheduling algorithms

- Resource Aware Scheduling Algorithm: RASA is a hybrid scheduling strategy that incorporates both the Min-min and Max-min methods. The Min-Min technique is used to finish small tasks before moving on to larger activities, whereas the Max-Min method is used to eliminate delays in big task execution. The results are exchanged in the sequential execution of a small and a big job on different resources, ignoring the waiting time of small tasks in Max-min calculations and the waiting time of large assignments in Min-min calculations. [9].

- Priority scheduling algorithm: Priority scheduling is a pre-emptive method that allows each task in the system to execute depending on its priority.”The job with the greatest priority can run first, while the job with the lowest priority can be put on hold.”FCFS order is used to schedule Equal-Priority tasks. This method has one disadvantage: it starves a process [10].

- Max-Min scheduling Algorithm: The Max-min algorithm is quite similar to the Min-min method in terms of how it works. The distinguishing characteristic is that the word minimum is substituted by maximum, and the job with the earliest completion time is assigned to the associated resource. Larger jobs are prioritized than lesser jobs [11].

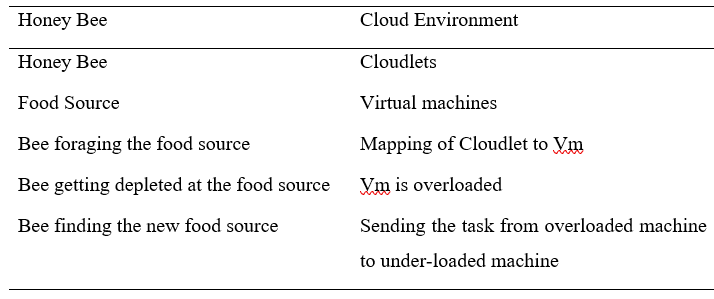

- Honey bee scheduling: This algorithm, similar to ACO and it is motivated by social agents and mimics honey bee foraging behavior to discover the best feasible solution. The flower patches, which have more nectar and pollen, are the food source here. Scout bees are a kind of bee that forages for food. They return to the hive after successfully locating the food source and begin to dance. The primary motivation for this action is to raise awareness of the food’s quality and quantity, as well as its location. In a beehive community, Forager honey bees take the Scout Bees to the food source and then begin collecting it. They then return to the hive and waggle their way to other basic honey bees in the hive, giving a consideration to the food that has been left. This leads to a further study of the path [12].

It may be used to solve both combinational and computing problems, and it can produce both generic and optimum solutions. Its goal is to distribute workloads among resources in the most efficient way possible in order to minimize overall execution time, lower execution costs, and increase cloud service performance [13].

While scheduling task to a virtual machine there is a lot of possible risk can occurred. To avoid such a problem effective risk management process is required. The primary component of success throughout the project’s execution is having a risk management plan that may assist decrease risk. Furthermore, employing an effective risk analysis approach aids risk management in obtaining a precise result that aids in making the best solution possible to prevent the risk.

1.5 Risk Management

Risk management became one of the cloud platform controls that aim to analyze and manage cloud computing risks in order to avoid them having an impact. Some high level risks are listed as below:

- Business case – The advantages and cost reductions may be exaggerated, because they do not take into account ongoing hazards and operational expenses.

- Data ownership – There’s a lot of confusion regarding who owns the data on the cloud (for example trade secrets, intellectual property, and customer details).

- Data security – Failure to implement business security standards in the cloud provider’s infrastructure and have faith in the cloud’s security safeguards.

- Sovereignty – There is a lack of information as to which nation and legal authority owns the data in the cloud.

- Assurance – Inability to get enough confidence on controls inside the cloud provider’s infrastructure.

Compliance issues, identity theft, malware infections and data leaks, diminished consumer confidence, and significant income loss are the primary security concerns of cloud computing.

1.5.1 Cloud Security Risks

Loss of Data: Natural disasters, criminal acts, or data wipes by the service provider can all result in the loss of data stored in cloud servers. Losing secret information may be disastrous for businesses, especially if they don’t have a backup strategy in place. One of the real time examples is Google. After being struck by lightning four times in its power supply cables, Google is one of the large digital companies that has lost all of its data permanently.

Malware Attacks: Cloud services may be used to steal private data. As technology advances and security measures improve, cybercriminals develop innovative methods for delivering malware to their intended targets. Attackers encrypt sensitive information and post it to YouTube as video files.

Diminished customer trust: Customers would undoubtedly feel insecure as a result of your company’s data breach issues. Millions of consumer credit and debit card details were stolen from information storage areas as a result of huge security flaws. Customers’ faith in the security of their personal information has been eroded as a result of the breaches. A data breach will undoubtedly result in a loss of clients, which will have an influence on the company’s income.

1.5.2 Managing Cloud Security

To successfully limit the security dangers posed by unmanaged cloud usage, businesses must analyze the data that is being moved to cloud servers and who is submitting the data. The processes outlined below will help corporate decision-makers and business IT managers analyze the security of their company’s data in the cloud.

Enforcing privacy Policies: Personal and sensitive data privacy and security are critical to any company’s success. Personal data kept by a corporation might be exposed due to bugs or security failures. If a cloud service provider fails to offer adequate security, the firm may consider moving cloud service providers or not keeping critical data in the cloud.

Cloud networks security: Malicious traffic should be identified and stopped during evaluations of cloud networks. Cloud service providers, on the other hand, have no idea of knowing what network traffic their customers intend to transmit and receive. After that, organizations and their service suppliers must collaborate to develop safety precautions.

Assess security vulnerabilities for cloud applications: Various kinds of data are stored on the cloud by different organizations. Depending on the type of information the company wants to protect, different aspects should be taken. Both the supplier or provider and the company face a variety of problems when it comes to cloud application safety. There are distinct concerns for both parties based on the cloud service provider’s deployment type, such as IaaS, SaaS, or PaaS.

Ensure governance and compliance is effective: To secure their assets, the number of businesses has already created privacy and compliance strategies or policies. They should also develop a governance framework that provides authority and a chain of duty inside the company, in addition to these policies. The responsibility and duties of each employee are clearly specified by a well-defined set of policies. It should also specify how they communicate and exchange data.

Service Level Agreement (SLA) breakdowns are a key concern or risk in cloud computing when utilizing cloud services. The SLA in terms of risk management is one of the topics that haven’t gotten much attention in cloud security.

1.6 SLA Violation Handling

A Service Level Agreement (SLA) is a contract among a cloud service provider and a customer that guarantees a certain level of performance.

SLA is formed at several levels, as shown below:

- Customer-based SLA: This kind of contract is utilized by individual clients and includes all the essential services that a customer may need while only utilizing one contract. It provides information about the kind and level of service accepted. For example, phone calls, messages and Internet services come under a telecommunications service, yet all of them are covered in a separate contract.

- Service-based SLA: This SLA is a contract with a same service type for all of its customers. Since the service is confined to one unchanging standard, it is easier and easier for suppliers. For example, adopting a service-based IT Helpdesk agreement would imply that the same service applies to all end-users that sign the service-based SLA.

- Multilevel SLA: This agreement is tailored to the requirements of the end user. In order to provide a more convenient service, the user integrates a number of criteria into the same system. The following sub-categories may be classified into this kind of SLA:

- Corporate level:This SLA requires no regular changes since its problems are usually unchanged. It contains an extensive explanation of all the essential elements of the agreement and applies to all end-user customers.

- Customer level:This contract addresses all service problems related to a certain client group. However, the kind of user services is not taken into account. For example, if a company asks for a stronger degree of security in one of its divisions. In this scenario, the whole business is protected by a security agency but, for particular reasons, needs a customer to be safer.

- Service level:In this agreement, all aspects attributed to a particular service regarding a customer group are included.

Only some SLA is actionable as contracts; the majority is agreements or contracts that are more akin to an Operating Level Agreement (OLA) and may not be subject to legal restrictions. Before signing a big deal with a cloud service provider, it’s essential to have an attorney check the agreements. The following are some of the parameters that are commonly specified in SLA:

- Availability of the Service

- Response time or Latency

- Each party accountability

- Warranties

1.6.1 Two major Service Level Agreements

Microsoft makes the SLA associated with the Windows Azure Platform components available, which is standard practice among cloud service providers. There is separate SLA for each component. The following are two important SLA:

- Windows Azure SLA- SLAs for computing and storage in Windows Azure are different. For computing, when a customer runs two or more role cases in distinct fault and upgrade domains, the client’s internet facing roles are guaranteed to have external connection at least 99.95 percent of the time. Furthermore, all of the customer’s role cases are analyzed, and errors in a role case’s process are guaranteed to be detected 99.9% of the time.

- SQL Azure SLA- SQL The database and internet gateway of SQL Azure will be accessible to Azure customers. Within a month, SQL Azure will be able to handle 99.9% Monthly Availability. Availability for a month, the percentage of the time the database was accessible to consumers to the entire time in a month is the proportion for a certain tenant database. In a 30-day monthly period, time is measured in minute intervals. Availability is always paid for a full month. If the customer’s efforts to access to a database are refused by the SQL Azure gateway, this period of time is reported as unavailable.

The use model is the foundation for SLA. Cloud companies frequently charge a premium for pay-per-use resources and only utilize conventional SLAs for that purpose. Customers can also pay at various levels that ensuring access to a set number of bought resources. Many times, the SLAs that come with subscriptions include a variety of terms & conditions. When a customer wants access to a certain amount of resources, he or she must subscribe to a service.

1.6.2 SLA setup criteria

A list of key criteria must be created in order to consistently construct a successful SLA.

- “Availability (e.g. 99.99% during work days, 99.9% for nights/weekends)

- Performance (e.g. maximum response times)

- Security of the data (e.g. encrypting all collected and transferred data)

- Disaster Recovery expectations (e.g. worse case recovery commitment)

- Location of the data (e.g. consistent with local legislation)

- Data accessibility (e.g. data retrievable from provider in readable format)

- Portability of the data (e.g. ability to transfer data to a distinct provider)

- The procedure for identifying problems and the timeline for resolving them (e.g. call center)

- Change Management process (e.g. changes – updates or new services)

- Dispute mediation process (e.g. escalation process, consequences)

- Exit strategy with provider expectations to enable a seamless transition”.

1.6.3 Benefits of the SLA

Some of the benefits of the service level agreement are discussed as below:

- Protects both parties. If internal IT delivers a new framework, they collaborate closely with end users to ensure that everything is functioning properly. They use emails and phone calls to follow framework success, and if there is an issue, they call the vendor to resolve it. When it comes to a corporate customer and their cloud provider, though, things aren’t quite so simple. An SLA outlines expectations and reporting so that the customer is aware of what to anticipate and who is responsible for what.

- Guarantees service level objectives. The cloud provider accepts the client’s SLOs and can demonstrate that they were met. There is a clear reaction and remedy method if there is an issue. This also safeguards the service provider. When a customer saved the money by accepting to a 48-hour data recovery window for certain of their apps, the supplier is fully justified in taking 47 hours.

- Quality of service. There is no need for the consumer to estimate or make assumptions about service levels. They receive regular information on the parameters that matter to them. If the cloud provider breaks a contract, the customer can seek redress through negotiated penalties. However these penalties will not always compensate for lost income, they can be powerful motivators when a cloud provider is paying $3,000 per day for a service outage.

The SLA violation can be predicted and rectified by various algorithms one of the helpful method is using Machine Learning technique.

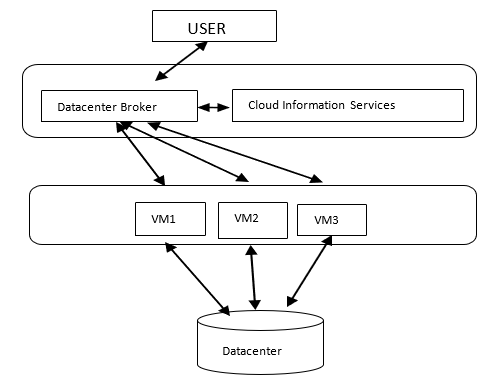

1.7 ML based Resource Allocation

In cloud computing, Resource Allocation (RA) is a method of distributing available resources to necessary cloud applications over the internet. It necessitates the type and quantity of resources that each application need to complete a user job. Machine learning (ML) is a form of artificial intelligence (AI) that allows software programs to improve their prediction accuracy without being expressly designed to do so. In order to anticipate new output values, machine learning algorithms use past data as input.

Enterprises may use machine learning in the cloud to test and launch smaller projects first, then scale up as demand and necessity grow. The pay-per-use concept has a number of advantages.

Cloud computing has had a significant influence on the Information Technology (IT) sector, and many businesses, like Google, Amazon, Microsoft, and Alibaba, are vying to deliver more powerful, dependable, and cost-effective cloud services. Furthermore, IT companies are attempting to restructure their business services in order to receive the full benefits of cloud computing. Service providers maintain cloud resources according to an on-demand pricing system in a cloud platform, and they must secure their own profitability while delivering high QoS and maximum customer happiness. As a result, resource allocation is essential in cloud computing and has an impact on QoS, overall system performance, and SLA, which measures customer satisfaction [14].

When the data is already in the cloud, using a cloud-based ML service makes perfect sense. Transferring big amounts of data is time-consuming and costly. Businesses may easily experiment with machine learning capabilities on the cloud and scale up when projects go live. The creation and implementation of algorithms that enable computers to generate behaviors based on empirical data, such as sensor information or databases, is referred to as machine learning. Automatically learning to detect complicated patterns and making intelligent judgments depending on data is a key focus of Machine Learning; The problem is that the set of all potential behaviors given all possible inputs is just too difficult to be described in a generic programming language, thus programs must, in effect, automatically explain programs.

1.7.1 Cloud Resource Management

For multi-objective optimization, cloud resource management necessitates complicated rules and judgments. Re-source management is one of the most essential components of cloud computing for IaaS. Workload estimate, job scheduling, VM consolidation, resource optimization, and energy optimization are just a few of the resource management activities that machine learning is being utilized for. There are five different types of rules:

- Load balancing

- Admission control

- Capacity allocation

- Energy conservation

- Assurances of service quality

Basic mechanism for resource Management

Rather than relying on ad hoc approaches, in cloud allocation strategies must be based on a systematic strategy. The four fundamental techniques for establishing resource management strategies in cloud computing are as follows:

- Control theory – Feedback is used in control theory to ensure system stability and estimate transient behavior, although it can only predict local behavior.

- Machine learning – Machine-learning approaches have the benefit of not requiring a system performance model. This approach might be used to coordinate the actions of several autonomous system administrators.

- Utility-based – A performance model and a method to connect user-level performance with cost are required for utility-based techniques.

- Market-oriented – It does not need any system model like conducting auctions for set of resources.

A sophisticated system with a high number of shared resources is referred to as a cloud computing platform. These are susceptible to unforeseen requests and can be influenced by circumstances outside of your control.

1.7.2 ML in cloud

There are 3 major ways in which machine learning in the cloud will act as a boon for businesses. These are:

- Cost Efficiency

The cloud has a model for pay-per-use. This removes the need for businesses to invest in heavy-duty and costly machine learning systems that are not always used daily. And this is true for most businesses because machine learning is used as a tool and not as a modus operandi.

Fig 1.3 Benefits of cloud with ML [113]

If AI or machine learning were to increase workloads, the pay-per-sec approach of the cloud would be convenient to assist businesses reduce expenses. GPUs can utilize their power without investing in high-cost equipment. Cloud machine learning allows inexpensive data storage and improves the cost-effectiveness of this technology.

- No special expertise required

Only 28 percent of businesses have expertise with AI or machine learning, according to Tech Pro study. The need for machine learning is growing and machine learning’s future scope is promising. Artificial intelligence features may be deployed using Google Cloud Platform, Microsoft Azure and AWS without needing a deep or specialized expertise. The SDKs and APIs are already available to natively integrate machine learning functions.

- Easy to scale up

When a business experiments with machine learning and its possibilities, it makes no sense for it to go completely, to complete it just at first. Using cloud-based machine learning, companies may initially test and deploy smaller projects on the cloud, then grow demand and requirement. The pay-per-use approach makes it easier to access more powerful features without the requirement for more advanced gear.

1.7.3 Advantages of ML in the cloud

- “Companies/enterprises may use the cloud to experiment with machine learning technology and scale up as needed as projects go live and demand grows.

- Companies who want to employ machine learning for their business but don’t want to invest a lot of money will find the pay-per-use model of cloud platforms to be an inexpensive option.

- To access and use numerous ML functions in the cloud, you don’t need sophisticated Data Science expertise”.

Workload estimate, job scheduling, VM consolidation, resource optimization, and energy optimization are only some of the resource management activities that machine learning is being utilized for.

1.8 Organization of Thesis

Chapter 2 devises the review of existing literature and their comparative analysis. Chapter 3 discusses a load balancing-based hyper heuristic method for work scheduling in a cloud setting. In Chapter 4, we looked at how to use a risk management framework in the cloud to implement a SLA-aware load balancing approach. In chapter 5, an inside view is made on how Honey bee optimized hyper heuristic algorithm and SLA-Aware Risk Management Framework are efficient than other techniques followed by chapter 6 summed up the research with its concluding points and future scope of the work.

CHAPTER-2

LITERATURE SURVEY

This chapter is discussed the survey about the cloud computing environment and virtual machine process. It is also studied about the load balancing process and scheduling approaches for improve the system performance and to protect the system against failure. A risk management topic also discussed here which is used to predict the risk and rectified while achieving the better task scheduling process and good load balancing technique. One of the major risks is SLA violation handling also discussed here and resource allocation to the virtual machine with the help of the machine learning concept also presented here.

2.1 Cloud Computing

The authors [15] proposed a unified Cloud service measuring index in order to give a single, complete methodology for multi-level Cloud service assessment. They defined 8 top-level Cloud service qualities and 65 specific key performance metrics for evaluating these qualities for a comprehensive and effective performance review. They used ‘‘Multi-Attribute Global Inference of Quality for a good analytical rating of the targeted Cloud services, which took into account the hierarchical connection of performance factors. This approach takes into account user’s needs for Cloud service attributes in terms of attribute weights and allows you to pick all or just the ones you want.

Authors in [16] developed a reliable service composition discovery technique for meeting user needs in a cloud context, including behavioral restrictions. They proposed a service composition discovery technique after designing a global service composition discovery framework depending on semantic connections between characteristics. Then they came up with a mechanism for identifying behavioral consistency. This technique significantly enhances user anticipated behavior and service composition consistency, making the service composition identified by this technique in cloud computing more reliable.

The study [17] used S-AlexNet Convolutional Neural Networks and Dynamic Game Theory to develop a security reputation model (SCNN-DGT). It’s also utilized in the Internet of Things (IoT) to secure the confidentiality of health data. With the help of the S-AlexNet convolutional neural network, the text data about user health data is first pre-classified. Then, depending on dynamic game theory, a suggestion incentive approach is proposed. The suggested work improves the dependability of mobile terminals while also strengthening the data security and privacy safety of mobile cloud services.

The study [18] proposed a Reliable Trust Computing Mechanism (RTCM) focused on multisource feedback and fog computing fusion. To improve the identification of malicious feedback nodes, a new measure for social sensor node trust is created, and multisource feedback trust values are collected at the sensing layer. Furthermore, the fog computing devices gather the sensing layer’s trust feedback information and conduct the recommendation trust calculation, that reducing communication time and computation overhead. Finally, a fusion algorithm is used to combine multiple forms of feedback trust values, overcoming the artificial weighting and subjective weighting limitations of previous trust methods.

For the screening of upstream Advanced Metering Infrastructure (AMI) traffic, the authors of [19] suggested a cloud-centric collaborative security service model. It also provides a service placement strategy for the proposed framework that is collaboration-aware. A quadratic assignment issue was devised as part of the placement strategy to reduce delay.

The author [20] conducted a quantitative assessment of security investments’ effects on security protocols and service availability. This analysis yields the best security expenditure for service availability and the smallest full service availability investment criterion. Finally, the relationship between them is investigated in order to help cloud computing providers choose the optimal strategy for integrating service and security efforts.

“Priority-aware resource allocation algorithms that account both host and network resources were proposed by the study’s authors [21].To decrease the possibility of network congestion caused by other tenants, the Priority-Aware VM Allocation (PAVA) algorithm allocates VMs of the high-priority application on closely linked hosts. Bandwidth allocation with a setup of priority queues for each networking device in a data center network handled by a Software-Defined Networking (SDN) controller also ensures the needed bandwidth for a vital application. In a multi-tenant cloud data center, the suggested techniques can distribute adequate resources for high-priority applications to fulfill the application’s QoS demand”.

The study [22] proposed a new trust evaluation paradigm for cloud service security and reputation. This model combines security- and reputation-based trust analysis methodologies to support the evaluation of cloud services in order to assure the security of cloud-based IoT contexts. To analyze the security of a cloud service, the security-based trust evaluation approach uses cloud-specific security measures. In addition, the reputation-based trust evaluation technique uses feedback rankings on cloud service quality to estimate a cloud service’s reputation.

The author [23] presented an ad-hoc mobile edge cloud that leverages Wi-Fi Direct to link neighboring mobile devices, exchange resources, and integrate security services. The proposed technique combines a multi-objective resource-aware optimization method with a genetic-based methodology to allow intelligent offloading decisions using adaptive profiling of contextual and statistical data from ad-hoc mobile edge cloud devices. To minimize energy consumption and processing time, the proposed solution employs a combination of components that rely on profiling, multi-objective optimization techniques, and heuristics.

Authors in [24] designed a novel multi-layered cloud-based approach. This approach is intended to simplify the administration of underlying resources and to create fast flexibility, allowing end users to access limitless computing capacity; it takes into account energy usage, security, and multi-user availability, scalability, and deployment concerns. To decrease the use of computer resources, an energy-saving technique is employed. To secure sensitive data and prevent hostile assaults in the cloud, security components have been incorporated.

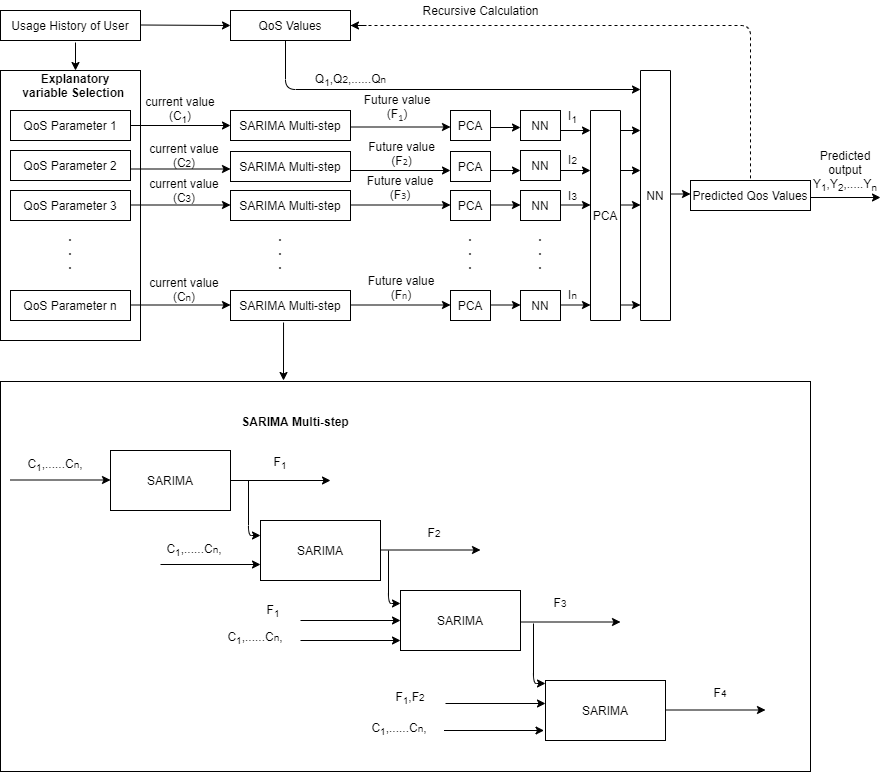

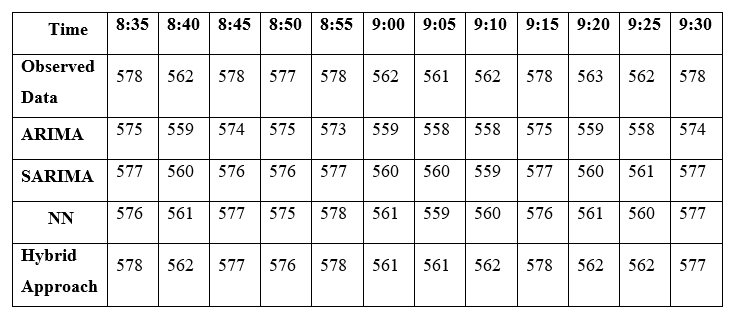

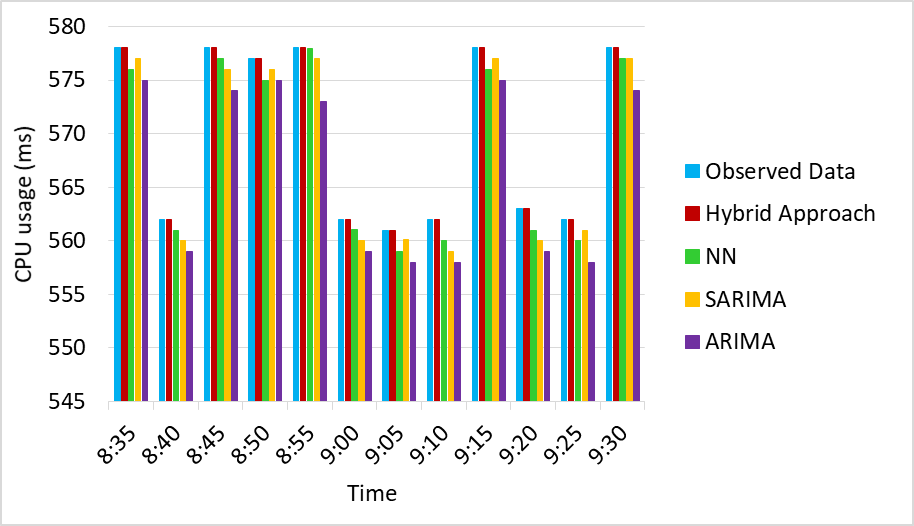

The authors of the study [25] recommended using descriptive values to forecast multi-step ahead wind speed using an S along with Seasonal Autoregressive Integrated Moving Average (SARIMA) based hybrid method. The explanatory factors are calculated first, and a wind speed forecast is made. Recursively, they were able to get this multi-step forward forecasting.

Authors in [26] proposed a neural network approach called Neural Decomposition (ND) for analyzing and extrapolating output timings. By executing a trial for Fourier-like decomposition into sequences of sinusoids, joined by blocks with non-periodic functions that are active, blocks having a sinusoidal activation system were used to discover linear patterns as well as other non-periodic components.

The study [98] introduced a hybrid cryptography method that uses encryption to efficiently transfer data over the cloud. To create the hybrid encryption algorithm, more than one algorithm was combined. The user can select the plain text data that needs to be encrypted using the hybrid cryptographic method.To protect data saved in the cloud, the suggested hybrid cryptography algorithms include RSA (Rivest Shamir Adleman) and AES (Advance Encryption Standard).

Authors in [102] proposed a unique paradigm for Network Traffic assessment in Cloud platform. The network traffic data is gathered in the cloud and stored in a cloud database, where a machine learning system is created. In a cloud computing environment, network traffic data is sent to a classification or clustering machine based on whether it is labelled or unlabelled. The system may be controlled remotely and has a scalable architecture.

2.2 Virtual Machine

The study [27] presented a method to enhance the energy efficiency of cloud data centers namely, a Virtual Machine Consolidation method with Multiple Usage Prediction (VMCUP-M).Multiple usages in this sense refer to both resource kinds and the time range used to forecast future usage. According to the local history of the evaluated servers, this method is run during the virtual machine consolidation stage to predict the long-term usage of different resource categories. The combination of present and anticipated resource usage enables accurate classification of overloaded and under-loaded servers, resulting in lower load and power usage following consolidation.

The study [28] created a load detection method for hosts in order to prevent rapid VM migration by determining the future condition of over-utilized/under-utilized hosts. They also proposed a virtual machine placement approach for establishing a group of host candidates obtaining migrated VMs in order to reduce VM transformations in the future.

The author [29] proposed a lower complexity Multi-Population Ant Colony System method with the Extreme Learning Machine (ELM) prediction (ELM_MPACS). The method used ELM to forecast the host state, and then transferred the virtual machine on the overloaded host to the normal host, while consolidating the virtual machine on the under-loaded host to another under-loaded host with greater utilization. Multiple populations create migration plans at the same time, and local search improves each population’s findings to decrease SLA violations.

The authors of [30] proposed altering a nova scheduler to enhance application performance in terms of execution time and processor usage by using a multi-resource VM placement technique.

To address the security risks to VMs during their whole lifespan, the study [31] presented a unique VM lifecycle security protection paradigm based on trusted computing. A notion of the VM lifespan is provided, which is split into the various active states of the VM. Then, a proper security framework based on trusted computing is created, which may expand the trusted relationship from the trusted platform module to the VM and safeguard the VM’s security and dependability throughout its lifespan.

The study [32] proposed a virtual machine placement technique and also a host overload / under-load recognition method for energy savings and SLA-aware virtual machine consolidation in cloud data centers, based on their recommended reliable, simple linear regression model of regression. Unlike traditional linear regression, the proposed methods alter an estimate and squint at over-prediction simultaneously adding the error to the forecast.

The authors of [33] suggested an approach based on the Energy-Aware concept for VM migration in automotive clouds. They also suggested a new VM migration strategy depend on the energy costs of migration, taking into account the energy costs of VMs in the cloud and cloudlets, including the energy costs of communication among cloudlets, clouds, and vehicles. It also resulted in a 5% decrease in VM drop migrations.

A virtual machine deployment selection method is proposed focused on a double-cursor control mechanism for the resource consumption status of Processor and memory was given in the study [34], which achieved a binocular optimization balance to some extent. The suggested method is utilized to increase cloud data center resource utilization, lower virtual machine migration rates, and minimize physical resource energy usage.

The study [35] proposed a technique for multi-objective resource allocation for those VMs based on Euclidean distance, as well as a data center migration strategy for VMs. HGAPSO, a hybrid technique that combines Genetic Algorithm (GA) and Particle Swarm Optimization (PSO), which allocates VMs to Physical Machines (PMs).Not only does the recommended resource management based on HGAPSO and virtual machine transfer minimize power and resource waste. As a result, there are no SLA violations in the cloud data center [36].

The author [37] suggested a Global Virtual-Time Fair Scheduler (GVTS), which ensures global virtual time fairness for threads and thread groups, even when they operate across multiple physical cores. The hierarchical enforcement of destination virtual time is used by this novel scheduler to improve the scalability of schedulers that are knowledgeable of the topology of CPU organizations.

The study [38] suggested virtual machine (VM) trading techniques in which idle Reserved Instance (RI) of Service Providers (SPs) with VM requirement less than the number of contractual RI are moved to SPs with VM requirement more than the number of contractual RI. They looked into two techniques as VM trading frameworks: RI with Self-help Effort (RISE) and RI with Mutual Aid (RIMA). The suggested virtual machine trading strategies reduced the number of virtual machines needed for On-Demand Instances (ODI).

The authors of [39] developed a dynamic reconfiguration system named Inter-Cloud Load Balancer (ICLB) that permits scaling up and down of virtual resources while avoiding service outages and communication problems. It comprises an inter-cloud load balancer that distributes incoming user HTTP (Hypertext Transfer Protocol) traffic over various instances of inter-cloud applications and services, as well as dynamic resource reconfiguration to meet real-time needs.

The authors of [99] presented methods for power-aware virtual machine scheduling and migration. The power savings are obtained by consolidating virtual machines onto a smaller number of servers and placing idle nodes into sleep mode. The distribution and consolidation of virtual machines on a server is dependent on server efficiency, i.e. minimizing energy usage while maximizing utilization.

The research [122] researched a virtual slice environment-aware paradigm that improves energy efficiency and addresses intermittent renewable energy sources. The virtual slice is an optimum flux allocated to virtual machines in a virtual data center taking into consideration traffic, VM locations, network physical capacity and the availability of renewable energy. They developed and then suggested an optimum solution for the issue of virtual slice assignment using different cloud consolidation methods.

The study [123] presented CloudNet’s cloud computing architecture connected with a virtual private network (VPN) infrastructure to offer smooth and safe connection between company and cloud data center locations. CloudNet offers improved support for the live WAN transfer of virtual machines in order to fulfill its goal of effectively combining geographically dispersed data center resources. In particular, they introduced a number of optimisms that reduce the costs of transferring storage and virtual machine memory over low bandwidth and high-latency internet connections during migration.

The author of [124] proposed a new method to best address the issue of host overload detection for any known fixed workloads and a given state configuration by maximizing the average time intermigration under the defined QoS model based on the Markov chain model. They heuristically adjust the method for uncertain, non-static workloads using a technique used to estimate multisize sliding windows.

The research [125] a framework suggested, called CoTuner, for coordinated VM and resident apps setting. The core of the framework is a model-free hybrid strengthening learning technique that combines the benefits of the Simplex method and the RL method and is further improved by the use of system-wide exploration policy. Experimental findings using TPC-W and TPC-C benchmarks in Xen-based, virtualized settings show that CoTuner can conduct a virtual server cluster in an optimum or nearly optimal flight configuration status in response to changing workloads.

2.3 Load Balancing

A Dynamic Load-Balanced Scheduling (DLBS) technique was proposed in the study [40] to utilize network efficiency when dynamically controlling the workload. They presented the DLBS task and created a series of successful heuristic scheduling algorithms for both traditional Open Flow network approaches that preserve data flow by each time slot.

The author [41] presented a Resource Aware Load Balancing Algorithm (RALBA) to ensure task distribution while maintaining balance based on VM capabilities estimation. The RABLA technique has two actions: (i) VMs are scheduled depending on their computational capability, and (ii) task mapping is done by picking the VM with the earliest completion time. RALBA, on the other hand, does not help Cloud tasks with SLA-aware scheduling. As a result, resource-based SLAs and time-limited cloudlets may not be well-prepared.

Authors in [42] conducted a study of the difficulties and drawbacks associated with present load balancing approaches in order to build a high-efficiency technique. They gave a thorough examination of the many types of load balancing, scheduling, and task-based load balancing methods used in the cloud.

The authors of [43] conducted a meta-analysis of published load balancing methods that were achieved through server consolidation. Server consolidation-based load balancing improves resource efficiency and can help data centers enhance their QoS metrics while also expanding their applications. The study [44] provided a load-balancing approach based on the ACO algorithm. The parameters of ant colony function are improved using this method.

The study [45] provided a comprehensive overview of current VM transformations, as well as techniques for determining which policies to use based on the advantages and drawbacks. They offered computational methods and their scopes with many elements such as SLA results degradation, VM migrations, and so on.

The study’s authors [100] presented a method for balancing the demand on storage servers while maximizing server capabilities and resources. It decreases the number of requests that are delayed, as well as the total response time of the system. It also takes into account the physical characteristics of a server, such as the number of Processor cores available, the size of the request queue, and the buffer used to hold incoming customer requests.

A novel Trust and Packet load Balancing based Opportunistic outing (TPBOR) protocol was described in the study [101].By using trustworthy nodes in the routing operation, the proposed approach is energy efficient as well as safe. In addition, the suggested protocol balances network traffic and evenly distributes traffic load across the network.

The study [114] introducing a load balancing and cloud application scaling energy-aware operating paradigm. The fundamental idea of their approach is to define an energy-friendly operating regime and to increase the number of servers in this system. Idle and lightly loaded servers are moved to one of the sleeping modes for energy conservation.

The research [115] offered a time and space efficiency adaptive method in a heterogeneous cloud. In order to improve the execution time of the map phase, a dynamic speculative execution approach is described and a prediction template is utilized for quickly predicting task performance times. A multi-objective optimization method combines the prediction model with the adaptive solution to improve space-time performance.

The study [116] the compromise between electricity usage and latency in transmission in the fog cloud computing system was studied. They developed a workload allocation problem which indicates the optimum distribution of the task between fog and the cloud towards minimum energy consumption with a restricted service time. The issue is addressed using an approximation method by dividing the primary problem into three subsystem subproblems that may be handled accordingly.

The authors in [117] Proposed a new architecture and two unified load balance algorithms. Current research demonstrates that their methods have a low computing cost, need relaxed precision in the estimate of the power price, and ensure user demands a service completion times. Compared to plans which use either geographical load balancing or temporal load balancing alone, thorough assessments show that the proposed spatial-temporal load balance approach substantially lowers distributed IDC energy costs (Internet Data Centers).

The authors of [118] Suggest a Cloud-hosted SIP (Session Initiation Protocol) Virtual Load Balanced Call Admission Controller (VLB-CAC). VLB-CAC calculates the optimum call admission rates and signal routes for admitted calls and also optimizes the assignment of SIP server CPU and memory resources. A novel linear programming model derives this optimum solution. This model needs some essential SIP server information as an input. VLB-CAC also has an autoscaler to address resource constraints. The suggested system is implemented as a virtual test bed in Smart Applications on Virtual Infrastructure (SAVI).

The research [119] Proposed inter-domain service transfer decision system to balance computational demands across different cloud domains. The system’s objective is to maximize the benefits for both the cloud system and users by reducing the number of service refusals, which substantially decrease the level of user happiness. To this purpose, they construct the decision-making process for service requests as a semi-Markov decision process. Optimal choices on service transfer are achieved by taking system revenues and expenditures together.

The authors of the study [120] Proposes iAware, a lightweight VM live migration method that is aware of interference. It experimentally captures the fundamental connections between VM performance interference and key variables which are accessible in practical terms via actual benchmarking tests on Xen virtualized cluster architecture. iAware jointly estimates and reduces migration and interference with co-location across VMs by developing a simple demand-supply multi-resource model.

The research [121] proposed a virtualization architecture considering the issue of load balancing. The suggested framework benefits from the flourishing use of the DVS and the flourishing usage of OpenFlow protocols. First, the system adapts diverse patterns of network communication by allowing arbitrary traffic matrices between VMs in virtually private clouds (VPCs). The sole limitation of network flows is that the network interface of a server is bandwidth. Second, the framework provides load balancing using a sophisticated connection creation mechanism. The program takes the bare metal data center designs and the changing network environment as input and adapts an international overlap on each connection. Finally, the suggested framework focuses on the design of the fat tree, which is extensively utilized in today’s data centres.

2.4 Scheduling Approaches

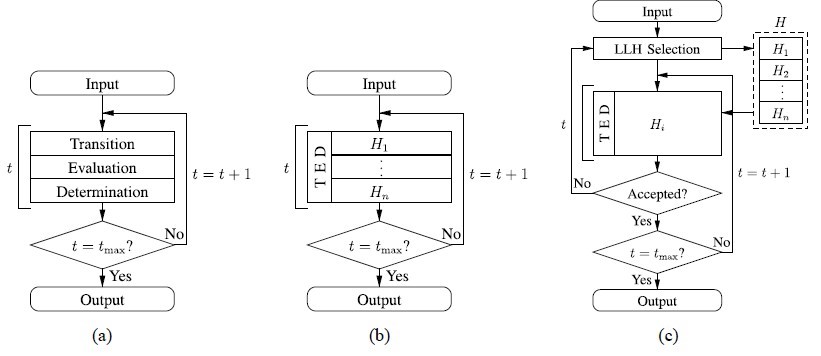

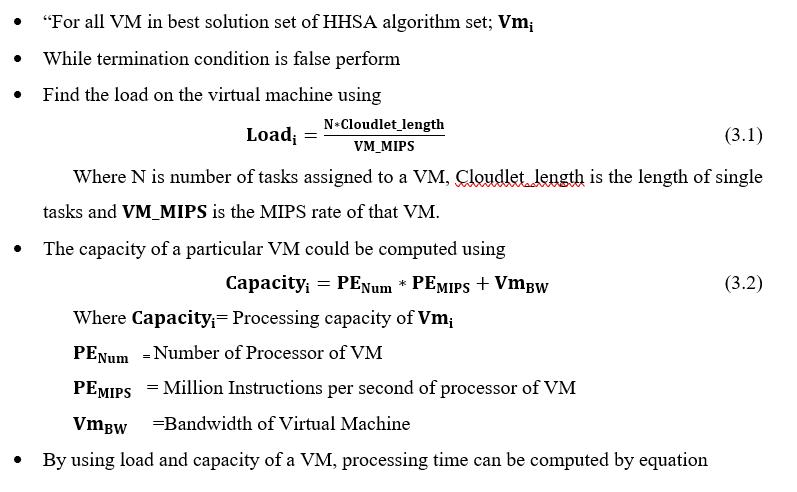

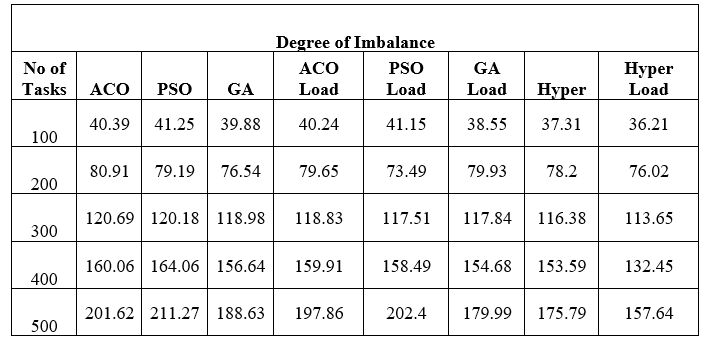

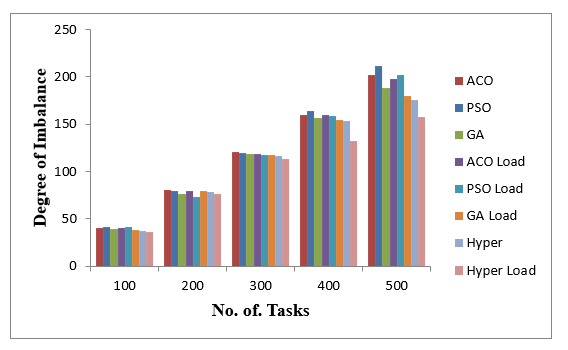

A Hyper-Heuristic Scheduling Algorithm (HHSA) was given in the research [46]. This method reduces the time it takes to schedule jobs. The ACO computation was given by the study’s authors [47] for completing distributed computing undertaking booking. The study [48] described a cloud-based remedy to the issue of job scheduling. A LAGA (Load Balance Aware Genetic Algorithm) is used in combination with min-min and max-min methods in this method [49].

The authors of [50] devised a dynamic task scheduling strategy based on three swarm intelligence approaches: ACO, ABC, and PSO, in order to reduce the time it takes to complete a given set of jobs. Authors in [51] designed a system centered on a Genetic algorithm for job scheduling with the goal of lowering total schedule makespan time and increasing resource utilization. The research [97] suggested a novel round robin scheduling technique that helps to increase CPU efficiency.

Work planning for cloud registration is investigated in the research [52].After conducting study and analysis on the subject, the team decides on a project that will take the shortest amount of time to complete and will cost the least amount of money. The research [53] focused on a few of the most important work process planning approaches. It provides a complete review of such distributed computing approaches, as well as a point-by-point grouping of them.

The research [54] presented the Ant Colony Optimization (ACO) based Load Balancing (LBACO) method was used to develop a job scheduling solution for cloud computing environments. This approach shortens the time it takes for tasks to complete by balancing the system’s load.

To overcome the scheduling problem, the authors of [55] devised an improved ant colony approach. Constraint functions are used to modify the nature of the scheduling arrangements in a practical way with a specific end goal in mind: to get the best possible results.

The author [56] addressed a scheduling method that incorporates a genetic algorithm, as well as min-min and max-min, and demonstrates how min-min and max-min are presented. The authors of the study [48] proposed a unique scheduling technique that enhances the fundamental particle swarm optimization (PSO) algorithm and categorizes fitness values using a self-adapting inertia weight and mutation process.

The study [57] concentrated on the problem of job security planning in a distributed computing environment. The authors of [58] presented an algorithm that successfully schedules jobs to the right servers while lowering makespan time and achieving improved load balancing outcomes.

The author [59] presented an efficient task scheduling method based on Particle Swarm Optimization and a chaotic perturbation technique, which results in a quicker convergence time and a shorter makespan time.

The study [60] focused on a scheduling method based on particle swarm optimization (PSO) that takes into account both current load and transmission cost and uses an inertia weight to effectively execute local and global searches while avoiding local search sinking. To minimize makespan and improve resource usage, the study [61] used a hybrid approach based on particle swarm optimization (PSO).

Authors in [62] the HABC method, which is a Heuristic Task Scheduling with Artificial Bee Colony algorithm for virtual machines in heterogeneous Cloud environment. It’s a new job scheduling and load balancing method for virtual machines in diverse settings, with the goal of reducing the system’s makespan time. Even when the number of jobs is increased and various types of information are dispersed, the suggested technique reduces the makespan.

The authors of [103] conducted a comparative analysis of several methods for their rationality, feasibility, and adaptability in a cloud scenario, after which they attempted to suggest a crossover method that might be used to enhance the present stage. With the objective of encouraging the cloud providers to improves their service quality.

The study [126] proposed a resource provisioning and scheduling strategy for scientific workflows on Infrastructure as a Service clouds. They presented an algorithm based on the meta-heuristic optimization technique, particle swarm optimization, which aims to minimize the overall workflow execution cost while meeting deadline constraints.

Authors in [127] proposed a novel rolling-horizon scheduling architecture for real-time task scheduling in virtualized clouds. Then a task-oriented energy consumption model is given and analyzed. Based on their scheduling architecture, they develop a novel energy-aware scheduling algorithm named EARH for real-time, aperiodic, independent tasks. The EARH employs a rolling-horizon optimization policy and can also be extended to integrate other energy-aware scheduling algorithms. Furthermore, they proposed two strategies in terms of resource scaling up and scaling down to make a good trade-off between task’s schedulability and energy conservation.

The authors of the study [128] presented two key contributions. (1) They proposed an autonomous synchronization-aware VM scheduling (SVS) algorithm, which can effectively mitigate the performance degradation of tightly-coupled parallel applications running atop them in over-committed situation. (2) They integrate the SVS algorithm into Xen VMM scheduler, and rigorously implement a prototype.

The research [129] introduced PRISM, a fine-grained resource-aware Map-Reduce scheduler that divides tasks into phases, where each phase has a constant resource usage profile, and performs scheduling at the phase level. They first demonstrated the importance of phase-level scheduling by showing the resource usage variability within the lifetime of a task using a wide-range of Map–Reduce jobs. They then presented a phase-level scheduling algorithm that improves execution parallelism and resource utilization without introducing stragglers.

The authors in [133] proposed an elastic resource provisioning mechanism in the fault-tolerant context to improve the resource utilization. On the basis of the fault-tolerant mechanism and the elastic resource provisioning mechanism, they designed a novel fault-tolerant elastic scheduling algorithms for real-time tasks in clouds named FESTAL, aiming at achieving both fault tolerance and high resource utilization in clouds.

The study [134] proposed avGASA, a virtualized GPU resource adaptive scheduling algorithm in cloud gaming. vGASA interposes scheduling algorithms in the graphics API of the operating system, and hence the host graphic driver or the guest operating system remains unmodified. To fulfill the service level agreement as well as maximize GPU usage, they proposed three adaptive scheduling algorithms featuring feedback control that mitigates the impact of the runtime uncertainties on the system performance.

2.5 Risk Management

The study [63] proposed a threat-specific risk assessment process that takes into account multiple cloud security attributes (e.g., vulnerability data, attack possibility, and effect of every attack related with the recognized threat(s)) but also client-specific cloud security demands. It enables a cloud provider’s security controller to make good judgments about mitigation techniques to safeguard particular customers’ outsourced computing assets from specific threats depending on their specific security demands. The suggested technique is not restricted to cloud-based systems; it may be readily applied to other networked systems as well.

The authors of [64] suggested an optimum security risk management approach to comprehensively limit the risks of a DoS (Denial of Service) attack and SLA breaches that might occur in the 5G edge-cloud ecosystem. A cyber risk-aware controller is developed through using Semi-Markov Decision Process architecture to determine the best admission, placement, and migration of a service while taking user taxonomy and service needs into account. To prepare the way for a secure edge-cloud process, a novel cost model is presented that balances the intended security risks, but also the cost and benefit of a secure service delivery.

The study [65] concentrated on a specific element of risk assessment in cloud computing: techniques within an approach that cloud service providers and consumers may use to assess risk throughout service development and operation. They highlighted the different stages of the service lifecycle during which risk assessment occurs, as well as the risk models that have been created and applied.

A Secret Sharing Group Key management protocol (SSGK) was suggested in the study [66] to safeguard the process of communication and exchanged data from unwanted access. The shared data is encrypted using a group key, and the group key is distributed using a secret sharing method in SSGK. The suggested approach significantly reduces the security and privacy concerns associated with data sharing in cloud storage while also saving storage space.

The study’s authors [67] proposed a decentralized and trustworthy Mobile Device Cloud infrastructure based on BlockChain (BC-MDC).By integrating a plasma-based blockchain into the MDC, BC-MDC facilitates decentralization and avoids dishonesty. They created four clever contracts to manage worker registration, task posting/allocation, rewarding, and punishing in a distributed manner.MDC job allocation is sometimes described as a stochastic optimization issue that minimizes both the long-term processing cost and the probability of task failure. The suggested method has a cheap cost of use and a high degree of accuracy.

Under server-side assaults, the study [68] proposed a Risk-aware Computation Offloading (RCO) mechanism to spread computation workloads safely across geographically scattered edge sites.RCO considers prospective attackers’ strategic actions in the edge system and strikes the right balance among risk management and service latency reduction. The RCO problem is formulated using the Bayesian Stackelberg game, which specifies an acceptable relationship among the edge system and the attacker. The Bayesian Stackelberg game, in especially, reflects the unpredictability of attacker behavior and allows RCO to operate even if the edge system does not know who it is up against.

Authors in [69] suggested a new Cloud Security Risk Management Framework (CSRMF) to aid businesses embracing Cloud Computing (CC) in identifying, analyzing, evaluating, and mitigating security threats in their Cloud infrastructures. The CSRMF, unlike standard risk management frameworks, is inspired by the companies’ business objectives. It enables any company using CC to be knowledgeable of cloud security threats and match low-level management actions with high-level business goals. It is intended to address the effects of cloud-specific security threats on a company’s business objectives. As a result, companies may undertake a cost-benefit analysis of CC technology adoption and obtain a sufficient degree of trust in Cloud technology. Cloud Service Providers (CSPs), on the other hand, may enhance productivity and profitability by controlling cloud-related threats.

The study [130] Presented new disaster-aware data center placement and Content Management methods in cloud networks which may minimize such loss by avoiding installation in disaster-induced places. You initially address a static disaster-conscious data center and content placing issue by adopting a linear integer program with the aim of minimizing the risk of content loss. They then developed an algorithm for catastrophe conscious dynamic content management that could adapt the current placement to dynamic circumstances. In this method, while lowering the total risk and making the network aware of disasters, reducing the use of network resources and meeting quality of service standards may also be accomplished.

The study [131] proposed the Cloud Compute Commodity (C3) Cloud Abacus pricing framework to suit both parties. They utilize financial option theory ideas and algorithms to create Clabacus. They developed a generic formula known as the Compound-Moores law that identifies technical advancements in resources, inflation and depreciation rates and so on. They link these Cloud parameters to price parameters, so that the Pricing Algorithm option may be effectively modified to calculate the Cloud resource price. Using financial-at-risk (VaR) analysis, the calculated resource pricing is adjusted to reflect the cloud provider’s inherent hazards. They presented fluctuating logic and evolutionary algorithm methods to calculate the supplier’s VaR resources.

The research [132] proposed a new data hosting system (CHARM name) integrating two major required functionalities. The first is to choose many acceptable clouds and a suitable redundancy method to store data with minimized money costs and assured available availability. Second, a transition process is initiated to distribute data in accordance with changes in data access patterns and cloud prices.

2.6 SLA violation Handling

The author [70] introduced SLA-aware autonomic Technique for Allocation of Resources (STAR), a SLA-aware autonomic resource management technique aimed at lowering SLA violation rates for effective cloud service delivery. STAR’s major goal is to lower the number of SLA violations and increase customer happiness by meeting their QoS standards. STAR also looked at other QoS factors including completion time, cost, delay, dependability, and availability to see how these affected SLA violation rates. However, energy efficiency, attack detection rate, resource usage and resource contention, scalability, and other factors were not taken into account.

The study [71 & 72] created a successful overbooking model while also acknowledging the necessity for best solution. They discovered that using the optimal strategy, the recommended overbooking technique will increase a federated company’s profits while lowering the chance of SLA violation. If a provider’s ability is reasonably high, and the provider uses the proposed overbooking technique frequently, the provider’s SLA breach may be denied and the provider’s profit maximized.

The authors of [73] introduced ATEA (Adaptive Three-threshold Energy-Aware algorithm), a novel virtual machine (VM) allocation method that makes effective use of previous data from VM resource use. It’s a new virtual machine deployment technique that combines an adaptive three-threshold approach with VM selection rules. Dynamic thresholds consume less energy than fixed thresholds. It effectively cuts down on energy usage and SLA violations.

The authors of the study [74] proposed the Balanced Resource Consumption (BRC) and Imbalance VM with Minimum Migration Time (IBMMT). The BRC-IBMMT approach is a balanced resource consumption approach that aims to optimize resource consumption efficiency while keeping a fair balance among conflicting energy consumption correlation goals and SLA breaches.

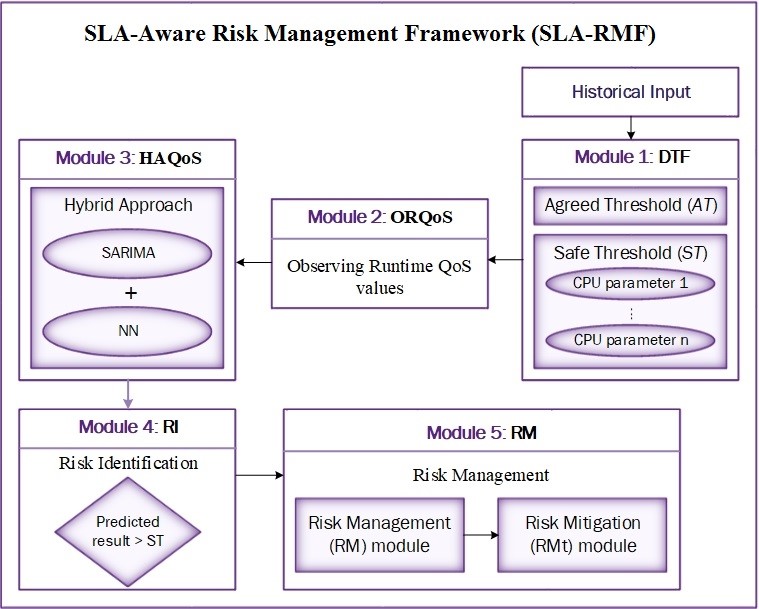

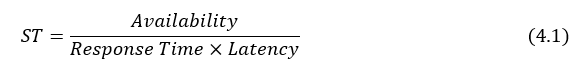

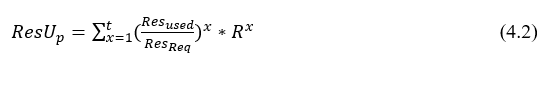

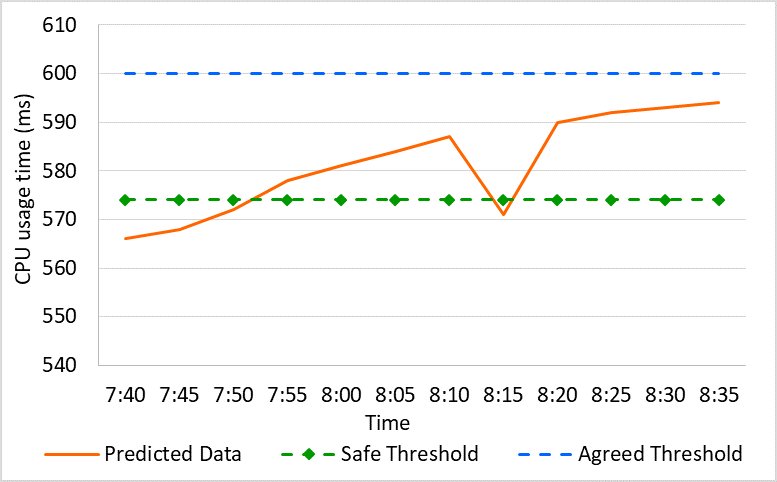

From a risk management standpoint, the research [75] created the SLA violation discovery and minimization process. To regulate SLA breaches, they suggested RMF-SLA, a Risk Management-based abatement method. SLA monitoring, violation prediction, and decision reference are all part of the SLA development process.

The study [76] proposed using a quality management criterion to improve cloud storage SLAs and meet industry QoS standards on both levels. The platform employs Reinforcement Learning (RL) to provide a technique for employing VM that can respond to program changes in order to ensure QoS for all client groups. Infrastructure costs, network capacity, and service demands are all factors in these improvements.

The study [77] offered two energy-efficient approaches based on consolidation that decreases energy usage and SLA breaches. They also improved the current Enhanced-Conscious Task Consolidation (ECTC) and Maximum Utilization (MaxUtil) methods, which aim to cut down on energy usage and SLA breaches. In basis of energy, SLA, and migrations, the suggested approach performs well. The suggested approaches achieve the aforementioned objectives by picking the most energy-efficient and CPU-capable servers.

Authors in [78] proposed a Risk Supervision technique for preventing SLA breaches (RMF-SLA), which assists cloud facility operators in reducing the risk of facility violations. This technique employs the Fuzzy Inference System (FIS), which investigates inputs such as the predicted trajectory of customer behavior, the supplier’s danger mindset, and the service’s dependability.